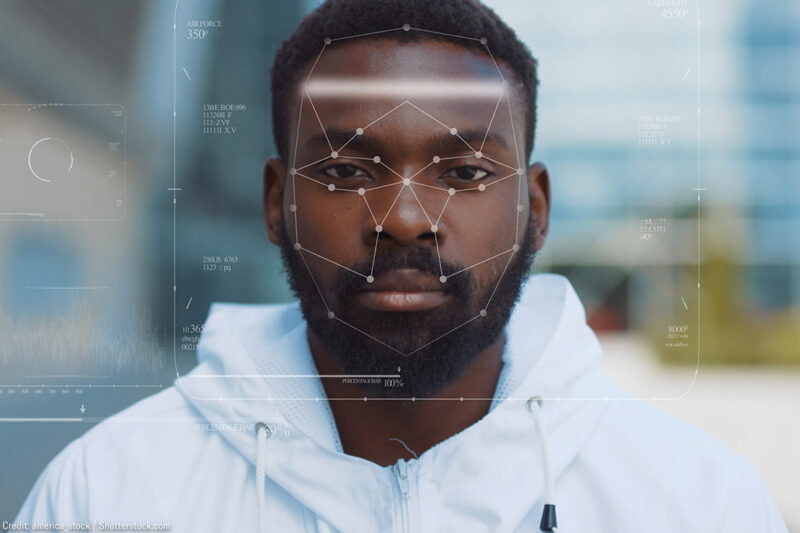

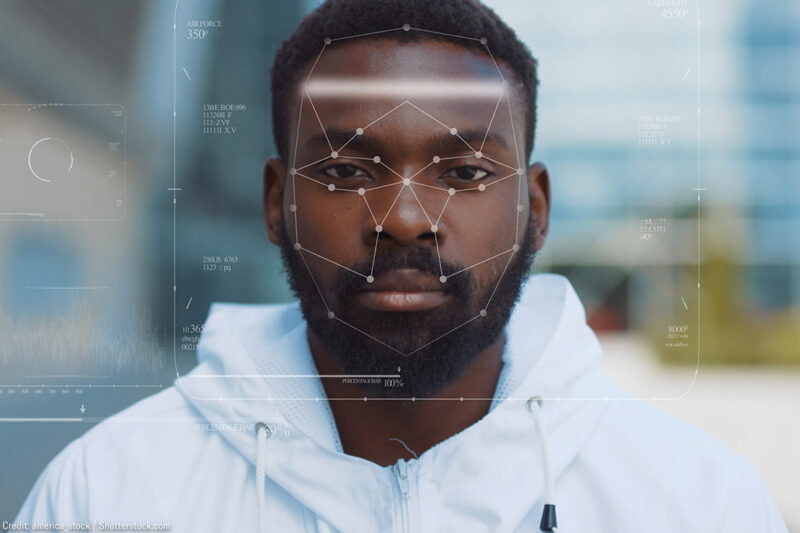

How is Face Recognition Surveillance Technology Racist?

Last week, IBM, Amazon, and Microsoft announced they would pause or end sales of their face recognition technology to police in the United States. The announcement caught many by surprise. For years, racial justice and civil rights advocates had been warning that this technology in the hands of law enforcement would be the end of privacy as we know it. It would supercharge police abuses, and it would be used to harm and target Black and Brown communities in particular.

But the companies ignored these warnings and refused to get out of the surveillance business. It wasn’t until a national reckoning over anti-Black police violence and systemic racism, with these companies caught in activists’ crosshairs for their role in perpetuating racism, that the tech giants conceded, even if only a little.

But why did IBM, Amazon, and Microsoft’s sale of face recognition to cops make them a target of the Black Lives Matter movement? How is face surveillance an anti-Black technology?

Face surveillance is the most dangerous of the many new technologies available to law enforcement. And while face surveillance is a danger to all people, no matter the color of their skin, the technology is a particularly serious threat to Black people in at least three fundamental ways.

First, the technology itself can be racially biased. Groundbreaking research by Black scholars Joy Buolamwini, Deb Raji, and Timnit Gebru snapped our collective attention to the fact that, yes, algorithms can be racist. Buolamwini and Gebru’s 2018 research found that some facial analysis algorithms misclassified Black women nearly 35 percent of the time, while nearly always getting it right for white men. A subsequent study by Buolamwini and Raji at the Massachusetts Institute of Technology confirmed that these problems persisted in Amazon’s software.

Late last year, the federal government’s National Institute of Standards and Technology (NIST) released its own damning report on bias in face recognition algorithms, finding that the systems generally work best on middle-aged white men’s faces, and not so well for people of color, women, children, or the elderly. The NIST study found that error rates tended to be highest for Black women, just as Buolamwini, Gebru, and Raji had found. These error-prone, racially biased algorithms can have devastating impacts on people of color. For example, many police departments use face recognition technology to identify suspects and make arrests. One false match can lead to a wrongful arrest, a lengthy detention, and even deadly police violence.

Second, police in many jurisdictions in the U.S. use mugshot databases to identify people with face recognition algorithms. But using mugshot databases for face recognition recycles racial bias from the past, supercharging that bias with 21st-century surveillance technology.

Across the U.S., Black people face arrest for a variety of crimes at far higher rates than white people. Take cannabis arrests, for just one example. Cannabis use rates are about the same for white and Black people, but Black people are nearly four times more likely to be arrested for marijuana possession than white people. Each time someone is arrested, police take a mugshot and store that image in a database alongside the person’s name and other personal information. Since Black people are more likely to be arrested than white people for minor crimes like cannabis possession, their faces and personal data are more likely to be in mugshot databases. Therefore, the use of face recognition technology tied into mugshot databases exacerbates racism in a criminal legal system that already disproportionately polices and criminalizes Black people.

Third, even if the algorithms are equally accurate across race, and even if the government uses driver’s license databases instead of mugshot systems, government use of face surveillance technology will still be racist. That’s because the entire system is racist. As journalist Radley Balko has carefully documented, Black people face overwhelming disparities at every single stage of the criminal punishment system, from street-level surveillance and profiling all the way through to sentencing and conditions of confinement.

Surveillance of Black people in the U.S. has a pernicious and largely unaddressed history, dating back to the era of slavery. Take 18th-century lantern laws, for example. As scholar Simone Browne observed: “Lantern laws were 18th century laws in New York City that demanded that Black, mixed-race and Indigenous enslaved people carry candle lanterns with them if they walked about the city after sunset, and not in the company of a white person. The law prescribed various punishments for those that didn’t carry this supervisory device.”

Today, police surveillance cameras, disproportionately installed in Black and Brown neighborhoods, keep constant watch.

The white supremacist, anti-Black history of surveillance and tracking in the United States persists into the present. It merely manifests differently, justified by different government excuses. Today, those excuses generally fall into two categories: spying that targets political speech, too often conflated with “terrorism,” and spying that targets people suspected of drug or gang involvement.

In recent years, we have learned of an FBI surveillance program targeting so-called “Black Identity Extremists,” which appears to be the bureau’s way of justifying domestic terrorism investigations of Black Lives Matter activists. Local police are involved in anti-Black political surveillance, too. In Boston, documents revealed that the police department was using social media surveillance technology to track the use of the phrase “Black Lives Matter” online. In Memphis, police have spied on Black activists and journalists in violation of a 1978 consent decree. The Memphis Police Department’s surveillance included undercover operations on social media targeting people engaged in First Amendment-protected activity. In New York, emails show that police spent countless hours monitoring Black Lives Matter protesters. And in Chicago, activists suspect the police used a powerful cell phone spying device to track protesters speaking out against police harassment of Black people.

These are just a few examples of a trend that dates back to the surveillance of Black people during slavery and extends through the 20th century, when the FBI’s J. Edgar Hoover instructed his agents to track the political activity of every single Black college student in the country. It continues to this day: Attorney General Bill Barr has reportedly expanded, temporarily, the U.S. Drug Enforcement Administration’s surveillance authorities in ways that could easily be abused to spy on people protesting the police killing of George Floyd.

The war on drugs and gangs is the other primary justification for surveillance programs that overwhelmingly target Black and Brown people in the U.S. From wiretaps to sneak-and-peek warrants, the most invasive forms of authorized government surveillance are typically deployed not to fight terrorism or investigate violent criminal conspiracies like murder or kidnapping, but to prosecute people for drug offenses. Racial disparities in the government’s war on drugs are well documented.

To avoid repeating the mistakes of our past, we must read our history and heed its warnings. If government agencies like police departments and the FBI are authorized to deploy invasive face surveillance technologies against our communities, these technologies will unquestionably be used to target Black and Brown people merely for existing. That’s why racial justice organizations like the Center for Media Justice are calling for a ban on the government’s use of this dystopian technology, and why ACLU advocates from California to Massachusetts are pushing for bans on the technology in cities nationwide.

We are at a pivotal moment in our nation’s history. We must listen to the voices of the protesters in the streets and act now to make systemic change. Banning face surveillance won’t stop systemic racism, but it will take one powerful tool away from institutions that are responsible for upholding it.